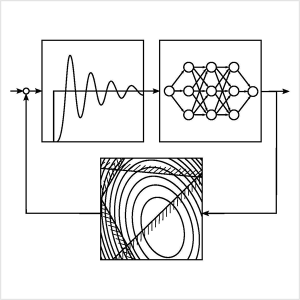

Model predictive control is widely used to keep processes such as building HVAC systems and oil refining operating stably at minimal cost. Machine learning algorithms have become ubiquitous for analyzing data and for making predictions and decisions based on those data. The relationships between control, learning, and optimization have always been strong, but they have recently been extending and deepening in surprising ways. Optimization formulations and algorithms have historically been vital to solving problems in control and learning; conversely, control and learning have provided interesting perspectives on optimization methods. The intersection of these three areas – control, learning, and optimization – is the focus of a workshop to be held in Los Angeles, California, February 24-28, 2018.

“The workshop provides an exciting opportunity for researchers to investigate relationships between the three areas and to identify new ways in which they can be used, for example in robotics and other cyberphysical systems,” said Stephen Wright, a professor at the University of Wisconsin – Madison, a principal investigator in the Argonne-led MACSER project, and one of the committee members organizing the workshop. Wright noted that a particular feature of the workshop is the inclusion of participants from the engineering control community, which has largely been excluded from the recent excitement about using reinforcement learning for tasks traditionally performed by control systems. “We believe that engineering perspectives, which encompass both mathematics and practical considerations, are valuable in prioritizing future research at the intersection of control, learning, and optimization.”

“MACSER users will find the workshop of interest because of the role that applied mathematics plays in model predictive control,” said Mihai Anitescu, who leads the MACSER project. He pointed to the example of developing a thermal model of a building. “New algorithms are needed that can handle the trade-offs required in optimizing thermal comfort and minimizing energy consumption. Such algorithms must take into consideration variability and uncertainty in dynamical systems, and the solutions must be scalable to handle the complexities involved in real-time decision-making,” he said.

The workshop on “Intersections between Control, Learning and Optimization” is sponsored by the Institute for Pure & Applied Mathematics at the University of California, Los Angeles. For more information about the workshop, visit the website: http://www.ipam.ucla.edu/programs/workshops/intersections-between-control-learning-and-optimization/?tab=overview.