Last Update: 10-Jan-2006

Kazutomo Yoshii <kazutomo@mcs.anl.gov>

NOTE:

The BlueGene IO Node (ION) consists of an IBM custom PowerPC-based CPU and memory. The CPU is a dual-core chip that integrates two 32-bit PowerPC 440 cores, L2 and L3 caches, and communication devices such as Ethernet, tree and torus. The PowerPC 440 was originally designed as an embedded CPU and does not have proper SMP support, i.e., no instruction-level atomic operation is provided. To share data between the two CPUs, you may need to invalidate the L1 cache explicitly, or use a special-purpose buffer called the lockbox to protect a critical region.

Both the ION and the Compute Node (CN) use the same custom CPU. The difference between the two is the network configuration. For example, the ION has Ethernet but the CN does not, and the CN has torus but the ION does not.

All devices are memory-mapped, so there is no PCI bus or other peripheral bus on the board. There is also no persistent storage device such as a hard drive on the ION. The OS image is loaded remotely into the main memory on the CPU board via the JTAG interface. Linux is the primary OS for the BlueGene ION. ION Linux is basically a 32-bit PowerPC Linux, but its booting mechanism differs from that of other platforms and it carries some BlueGene-specific patches. Currently, Linux running on the ION uses only the first CPU, while the second CPU sits in a busy loop.

The booting process is very hardware dependent, even among systems that use the same CPU. BlueGene ION Linux therefore boots differently from Linux on other platforms, for example Linux running on an Apple Macintosh. Currently there is no documentation on how the ION Linux kernel boots. This article describes the ION Linux booting mechanism.

Three ELF-formatted files are associated with the ION booting process: mmcs-mloader.rts, zImage.elf and ramdisk.elf.

The size of mmcs-mloader.rts is approximately 120 KB. This program performs memory initialization and other preparation tasks before the Linux kernel starts. zImage.elf is an ELF-formatted file that contains the Linux kernel. Technically, it holds the Linux loader program in the program (.text) section and the compressed Linux kernel in the data section.

The default images can be found at /bgl/BlueLight/ppcfloor/bglsys/bin/. If necessary, you can build your own binary images and use them instead of the defaults. The ZeptoOS project helps you build the ION Linux kernel and Ramdisk. Developing your own mmcs-mloader.rts is possible if you have a deep understanding of the hardware.

The Linux kernel image (zImage.elf) and the Ramdisk image (ramdisk.elf) are already mapped into main memory before Linux starts. These images are copied remotely from the BlueGene service node after mmcs-mloader initializes the main memory (this still needs to be confirmed).

Let's take a look at the following table.

Linux kernel ELF image (zImage.elf), entry point: 00800000

| Section | Address | Size | Comment |
|---|---|---|---|
| .text | 00800000 | 0044c0 | start, relocate and decompress_kernel; jumps to the next entry point |
| .data | 00805000 | 0d6000 | Compressed kernel image. The decompressed image is mapped to [0x0, 0x400000). |

Ramdisk ELF image (ramdisk.elf)

| Section | Address | Size | Comment |
|---|---|---|---|
| .data | 01000000 | 0b434c | Gzipped ext2 Ramdisk image. Note: decompress_kernel does not decompress the Ramdisk. |

In the Linux kernel ELF image, the text section is mapped to 0x00800000 and the data section to 0x00805000 in main memory. Similarly, the data section of the Ramdisk ELF image is mapped to 0x01000000. The Ramdisk ELF image simply contains a gzipped ext2 disk image that holds the initial Ramdisk.
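The layout described above can be checked with a little arithmetic. The following is a minimal sketch that takes the addresses and sizes from the two tables, computes where each region ends, and confirms that none of them collides with the region reserved for the decompressed kernel. The helper names (`end_address`, `overlaps`, `find_overlaps`) are made up for this illustration and are not part of any BlueGene tool.

```python
# Addresses and sizes copied from the two tables above (hex, in bytes).
SECTIONS = [
    ("zImage.elf .text", 0x00800000, 0x0044C0),
    ("zImage.elf .data", 0x00805000, 0x0D6000),
    ("ramdisk.elf .data", 0x01000000, 0x0B434C),
]
# Region that receives the decompressed kernel, per the .data comment.
KERNEL_REGION = ("decompressed kernel", 0x00000000, 0x00400000)


def end_address(start, size):
    """Return the first address past a region (exclusive end)."""
    return start + size


def overlaps(a, b):
    """True if two (name, start, size) regions share at least one byte."""
    return a[1] < end_address(b[1], b[2]) and b[1] < end_address(a[1], a[2])


def find_overlaps(regions):
    """Return the name pairs of all overlapping regions."""
    found = []
    for i, a in enumerate(regions):
        for b in regions[i + 1:]:
            if overlaps(a, b):
                found.append((a[0], b[0]))
    return found


if __name__ == "__main__":
    for name, start, size in SECTIONS + [KERNEL_REGION]:
        print(f"{name:20s} [{start:#010x}, {end_address(start, size):#010x})")
    print("overlaps:", find_overlaps(SECTIONS + [KERNEL_REGION]))
```

With these numbers the compressed image and the Ramdisk both sit above 0x400000, so decompressing the kernel to address 0x0 does not overwrite either of them.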

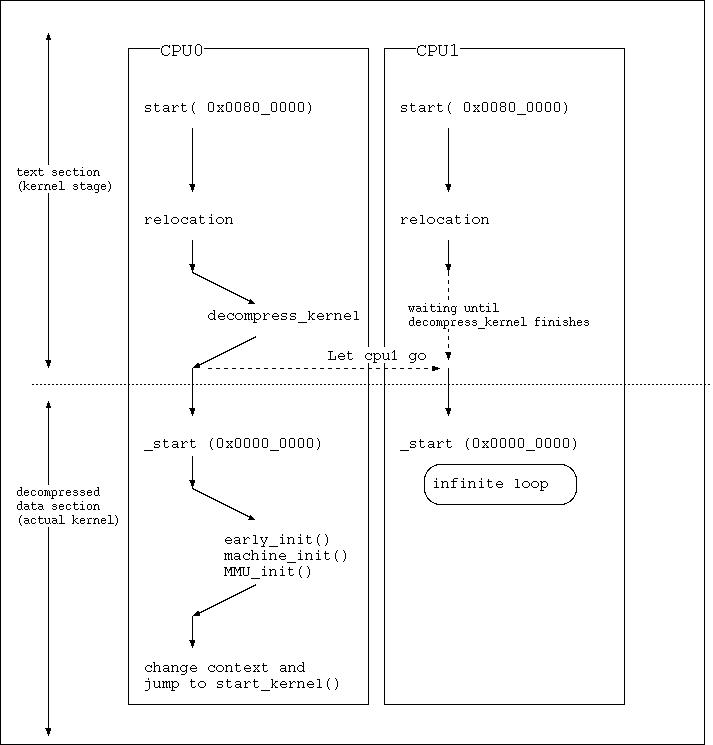

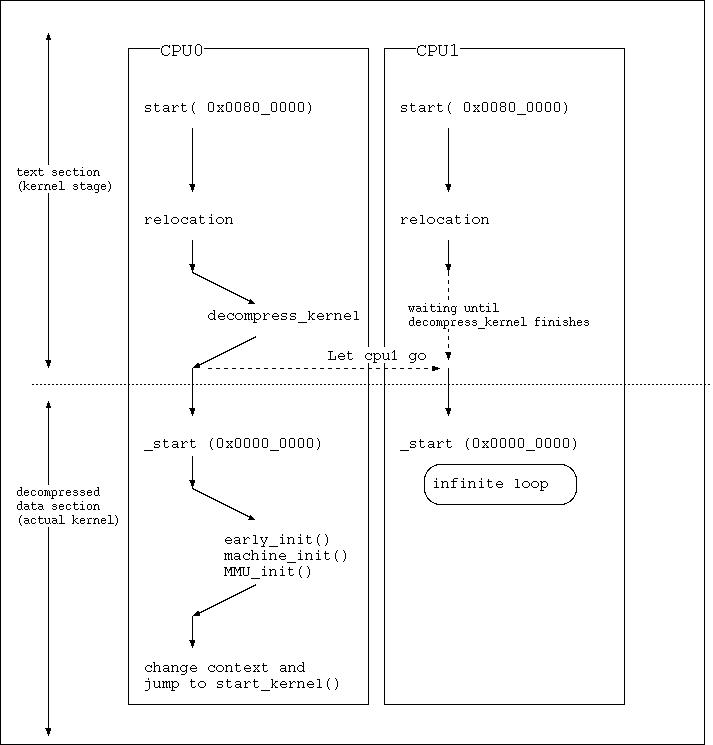

Now the node is ready to boot. The following figure shows the overall flow up to the point where start_kernel() starts. At the very early stage both CPUs are running, until the second CPU enters an infinite loop in _start (I don't know why the second CPU is not stopped at the very beginning).

The entire process starts from the executable code located at 0x800000, which is the entry point. (Presumably the service node initiates this invocation remotely through the JTAG interface.) The program in the text section of the kernel ELF image is executed. The code at 0x800000 comes from "start" in arch/ppc/boot/simple/head.S. head.S is approximately 2.9 KB in size, but most of it is inside ifdefs that are not in effect here. The effective assembly code therefore looks like the following (the actual disassembled code is slightly more complicated).

start @ head.S

    start:
        mflr r3
        b relocate

The above code saves the address of the entry point in the r3 register and jumps to "relocate".

The function relocate is located in arch/ppc/boot/common/relocate.S. The original purpose of relocate.S is to relocate the boot code because of physical memory limitations (this needs to be explained more clearly later). On the BlueGene ION, however, no memory relocation is required (because the mloader has already arranged the memory layout).

Despite what the function name implies, the relocation code has no effect on BlueGene. In other words, the executable image stays in the same location. The function relocate simply invokes the function decompress_kernel and then jumps to 0x0, where _start is placed. At this stage both CPUs are running, so we need to avoid running decompress_kernel on both of them. The code shown below takes care of the second CPU.

We can check whether the current thread is running on the second CPU by reading a special-purpose register. The instruction "mfspr R, 0x11E" performs this check: on the second CPU, a non-zero value is stored in register R.

In the following code, the second CPU busy-loops until the value stored in bgl_secondary_go becomes non-zero. Note that the instruction "dcbi" invalidates a data cache block; without it, the second CPU would not get out of the busy loop. The value stored in bgl_secondary_go is updated by the first CPU thread after it returns from decompress_kernel.

As mentioned previously, the BlueGene dual-core CPU does not have a proper synchronization mechanism, so the cache must be invalidated explicitly whenever the two CPUs communicate with each other.

Handling the second CPU

        li r0, 0
        mfspr r4, 0x11E
        cmpw r4, r0                  /* check second CPU */
        bgt bgl_secondary_waitloop   /* if second CPU */
        b relocate_continue          /* if first CPU */

        .data
        .align 5
        .globl bgl_secondary_go
    bgl_secondary_go:
        .long 0                      /* condition is stored here */
        .previous

    bgl_secondary_waitloop:
        GETSYM(r9, bgl_secondary_go)
    2:  dcbi 0,r9
        lwz r6,0(r9)
        cmpwi r6,0
        beq 2b
        li r4,0
        li r6,0
        li r9,0x0000
        mtlr r9
        blr                          /* jump to _start */
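To illustrate why the wait loop needs "dcbi", here is a toy model of two CPUs with private, non-coherent data caches. It is only a sketch under simplifying assumptions: the classes, the flag address `BGL_SECONDARY_GO` and the `store_through` helper are hypothetical, the cache never evicts anything on its own, and the writer is assumed to push its store out to main memory. Real hardware is more subtle, but the model shows the basic effect.

```python
class SharedMemory:
    """Main memory visible to both CPUs (a plain address -> value map)."""

    def __init__(self):
        self.words = {}

    def load(self, addr):
        return self.words.get(addr, 0)

    def store(self, addr, value):
        self.words[addr] = value


class Cpu:
    """A CPU with a private, non-coherent data cache (toy model)."""

    def __init__(self, memory):
        self.memory = memory
        self.cache = {}

    def lwz(self, addr):
        # A load hits the private cache if the word is already there.
        if addr not in self.cache:
            self.cache[addr] = self.memory.load(addr)
        return self.cache[addr]

    def dcbi(self, addr):
        # Drop the cached copy so that the next load goes to main memory.
        self.cache.pop(addr, None)

    def store_through(self, addr, value):
        # Assumption: the writer makes its store visible in main memory.
        self.cache[addr] = value
        self.memory.store(addr, value)


def wait_for_go(cpu, addr, invalidate, on_spin, max_spins=10):
    """Spin until the word at addr is non-zero; return spins used or None."""
    for spin in range(max_spins):
        if invalidate:
            cpu.dcbi(addr)
        if cpu.lwz(addr) != 0:
            return spin
        on_spin(spin)  # stand-in for time passing on the other CPU
    return None


BGL_SECONDARY_GO = 0x1000  # hypothetical address of the flag


def run(invalidate):
    memory = SharedMemory()
    first, second = Cpu(memory), Cpu(memory)

    def first_cpu_progress(spin):
        if spin == 2:  # the first CPU finishes decompress_kernel "later"
            first.store_through(BGL_SECONDARY_GO, 1)

    return wait_for_go(second, BGL_SECONDARY_GO, invalidate, first_cpu_progress)
```

In this model `run(True)` leaves the loop shortly after the first CPU sets the flag, while `run(False)` keeps reading the stale cached zero and gives up after `max_spins` iterations.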

The function decompress_kernel is written in C. As its name implies, it expands the compressed kernel image and places it in the memory region starting at 0x0. It also obtains the Ramdisk size and the kernel command line and puts them into the bi_record structure.

The first console messages come from this function.

    loaded at:     00800000 008DD2A8
    zimage at:     008057BF 008D9F7B
    avail ram:     00400000 00800000
    Linux/PPC load: console=bgcons root=/dev/ram0 rw
    Uncompressing Linux...done.
    Now booting the kernel
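The core job of decompress_kernel, expanding a gzip stream from one memory region into another, can be sketched as follows. This is not the kernel's C code: it models main memory as a Python `bytearray`, uses the standard `gzip` module in place of the kernel's own inflate routine, uses a made-up byte pattern as the "kernel", and ignores the bi_record handling. The addresses are the ones from the zImage.elf table.

```python
import gzip

ZIMAGE_DATA = 0x00805000   # .data of zImage.elf (compressed kernel)
KERNEL_BASE = 0x00000000   # destination of the decompressed kernel
KERNEL_LIMIT = 0x00400000  # end of the destination region


def decompress_kernel(memory, compressed_size):
    """Expand the gzip stream at ZIMAGE_DATA into memory at KERNEL_BASE.

    Returns the size of the decompressed image in bytes.
    """
    compressed = bytes(memory[ZIMAGE_DATA:ZIMAGE_DATA + compressed_size])
    image = gzip.decompress(compressed)
    if KERNEL_BASE + len(image) > KERNEL_LIMIT:
        raise ValueError("decompressed kernel does not fit below 0x400000")
    memory[KERNEL_BASE:KERNEL_BASE + len(image)] = image
    return len(image)


def demo():
    """Round-trip a stand-in kernel through the simulated memory."""
    # Stand-in "kernel": repetitive bytes so that it compresses well.
    fake_kernel = b"vmlinux!" * 4096
    compressed = gzip.compress(fake_kernel)

    # Simulated main memory, just large enough to hold the compressed data.
    memory = bytearray(ZIMAGE_DATA + len(compressed))
    memory[ZIMAGE_DATA:ZIMAGE_DATA + len(compressed)] = compressed

    size = decompress_kernel(memory, len(compressed))
    return bytes(memory[KERNEL_BASE:KERNEL_BASE + size]) == fake_kernel
```

Calling `demo()` places the compressed stand-in at 0x805000, expands it to 0x0 and reports whether the bytes at 0x0 match the original.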

The function _start is placed at memory location 0x0 by decompress_kernel. The function relocate jumps to it, and the threads of both CPUs enter it, but it immediately sends the second CPU thread into an infinite loop. From this point on, only one CPU is running.

This function performs very low-level initialization, such as invalidating all TLB entries and installing the Linux kernel base mapping.

Eventually the function jumps to start_kernel, which starts multitasking, virtual memory and other regular kernel services, using the following instructions. start_kernel is located in init/main.c.

Change context and jump to start_kernel

        lis r4,start_kernel@h
        ori r4,r4,start_kernel@l
        li r3,MSR_KERNEL
        mtspr SRR0,r4
        mtspr SRR1,r3
        rfi
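The instruction sequence above builds a 32-bit address from two 16-bit halves and then uses rfi to switch to it. The sketch below models that in plain Python: `lis`/`ori` assemble the address, and rfi takes the next program counter from SRR0 and the new machine state from SRR1. The values chosen for `START_KERNEL` and `MSR_KERNEL` are hypothetical; the real ones depend on the kernel build.

```python
def split_address(addr):
    """Split a 32-bit address into the @h and @l halves used by lis/ori."""
    high = (addr >> 16) & 0xFFFF   # start_kernel@h
    low = addr & 0xFFFF            # start_kernel@l
    return high, low


def lis_ori(high, low):
    """Model 'lis r4,high' followed by 'ori r4,r4,low'."""
    r4 = (high << 16) & 0xFFFFFFFF  # lis: load the immediate shifted left by 16
    r4 |= low                       # ori: OR in the low 16 bits
    return r4


def rfi(srr0, srr1):
    """Model rfi: the next PC comes from SRR0 and the new MSR from SRR1."""
    return {"pc": srr0, "msr": srr1}


# Hypothetical values for illustration only.
START_KERNEL = 0xC0250A5C
MSR_KERNEL = 0x1000

high, low = split_address(START_KERNEL)
state = rfi(srr0=lis_ori(high, low), srr1=MSR_KERNEL)
```

Because `ori` does not sign-extend its immediate, the plain high half (`@h`) is the right partner for it; the adjusted form `@ha` is only needed when the low half is added with a sign-extending instruction such as `addi`.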

After start_kernel(), the boot process is essentially the same as for any other regular Linux kernel.

Presumably, some kind of program runs on the service node and issues JTAG commands to send data to nodes, receive data from nodes and start executables on nodes.

A program image such as the Linux kernel is wrapped in the ELF format so that the loader program can determine the start address and size of each object as well as the entry point (jump address). The loader uses the JTAG interface to send a program image to the specified IO Node(s) or Compute Node(s). The JTAG interface acts like RDMA. A program running on a node can also send notifications to the loader program over JTAG.
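How a loader could pull this information out of an ELF file can be sketched with Python's `struct` module. The real service-node loader is not documented here, so this is only an illustration of the ELF side: it reads the entry point and the loadable segments from a 32-bit big-endian ELF image. Whether the actual loader works from program headers or section headers is an assumption, and `build_demo_image` constructs a tiny synthetic header (with values borrowed from the zImage.elf table) purely so that the parser has something to run on.

```python
import struct

ELF32_HEADER = struct.Struct(">16sHHIIIIIHHHHHH")  # big-endian ELF32 file header
ELF32_PHDR = struct.Struct(">IIIIIIII")            # big-endian program header
PT_LOAD = 1


def parse_elf32_be(image):
    """Return (entry, [(paddr, filesz, memsz), ...]) for the PT_LOAD segments."""
    fields = ELF32_HEADER.unpack_from(image, 0)
    ident, entry, phoff = fields[0], fields[4], fields[5]
    phentsize, phnum = fields[9], fields[10]
    # ident[4] == 1 means ELFCLASS32, ident[5] == 2 means big-endian data.
    if ident[:4] != b"\x7fELF" or ident[4] != 1 or ident[5] != 2:
        raise ValueError("not a 32-bit big-endian ELF image")
    segments = []
    for i in range(phnum):
        (p_type, _offset, _vaddr, paddr,
         filesz, memsz, _flags, _align) = ELF32_PHDR.unpack_from(
            image, phoff + i * phentsize)
        if p_type == PT_LOAD:
            segments.append((paddr, filesz, memsz))
    return entry, segments


def build_demo_image():
    """Build a synthetic ELF header plus one program header (no payload)."""
    ident = b"\x7fELF" + bytes([1, 2, 1]) + bytes(9)
    header = ELF32_HEADER.pack(
        ident, 2, 20, 1,            # ET_EXEC, EM_PPC, version 1
        0x00800000,                 # e_entry
        ELF32_HEADER.size, 0, 0,    # e_phoff, e_shoff, e_flags
        ELF32_HEADER.size, ELF32_PHDR.size, 1,  # e_ehsize, e_phentsize, e_phnum
        0, 0, 0)                    # no section headers in this demo
    phdr = ELF32_PHDR.pack(PT_LOAD, 0x10000, 0x00800000, 0x00800000,
                           0x44C0, 0x44C0, 5, 0x10000)
    return header + phdr
```

Running `parse_elf32_be(build_demo_image())` yields the entry point together with one loadable segment, which is the kind of information a loader needs before it can copy an image to a node and start it.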

The loader program sends mmcs-mloader.rts to the ION(s) and starts it. mmcs-mloader.rts is loaded at the very top of the address space (0xffffca80 to 0xffffffff) and starts from 0xfffffffc (see the table below). It seems that the paging unit is already turned on and that the firmware has set up some page directories for the upper memory region. Other memory regions are probably unavailable, or at least not guaranteed to be usable, at this time.

mmcs-mloader initializes the main memory and sets up page directories for the next program, such as the Linux kernel. It also performs other tasks such as global interrupt initialization, but we have not fully figured out what mmcs-mloader does.

mmcs-mloader reports its status to the JTAG loader program via a mailbox mechanism implemented on top of JTAG.

mmcs-mloader eventually enters an infinite loop, so it does not jump to the Linux kernel startup code directly. mmcs-mloader.rts may look like a boot loader such as GRUB or LILO, but it is not. It only initializes the main memory and the other things that the Linux kernel or the compute node kernel needs.

mmcs-mloader.rts, entry point: fffffffc

| Section | Address | Size | Comment |
|---|---|---|---|
| .text | ffffca80 | 000d28 | eventually infinite loop |
| .resetpage | fffff000 | 0001f8 | eventually "b ffffca84" |
| .resetvector | fffffffc | 000004 | only contains "b fffff000" |
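The single instruction in .resetvector can be reproduced by hand. The sketch below encodes and decodes a PowerPC unconditional branch (`b`, I-form: primary opcode 18, a 24-bit word displacement, AA = 0, LK = 0). It assumes that the "b fffff000" in the table is a relative branch; the table alone does not say whether the real image uses the relative or the absolute form. The function names are made up for this example.

```python
def encode_branch(from_addr, to_addr):
    """Encode a relative PowerPC 'b' (I-form, AA=0, LK=0) as a 32-bit word."""
    displacement = to_addr - from_addr
    if displacement % 4 != 0 or not -(1 << 25) <= displacement < (1 << 25):
        raise ValueError("target not reachable by a relative branch")
    return (18 << 26) | (displacement & 0x03FFFFFC)


def decode_branch(addr, word):
    """Return the target of a relative 'b' instruction located at addr."""
    if word >> 26 != 18 or word & 0x3:
        raise ValueError("not a relative 'b' instruction")
    displacement = word & 0x03FFFFFC
    if displacement & 0x02000000:  # sign-extend the 26-bit displacement
        displacement -= 1 << 26
    return (addr + displacement) & 0xFFFFFFFF


RESET_VECTOR = 0xFFFFFFFC  # .resetvector, also the ELF entry point
RESET_PAGE = 0xFFFFF000    # .resetpage

word = encode_branch(RESET_VECTOR, RESET_PAGE)
```

Under that assumption the four bytes at 0xfffffffc would hold the word 0x4bfff004, a backward branch of 0xffc bytes. This also shows why .resetvector is only 4 bytes long: it sits in the last word of the 32-bit address space and can do nothing more than branch back into .resetpage.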